A Watershed Moment in AI

On March 14, 2023, OpenAI released its next-generation GPT-based model, and it was a giant leap from GPT-3. They called it GPT-4 (0314).

The model was reportedly about an order of magnitude larger (OpenAI never disclosed its size). In line with Gwern’s scaling hypothesis, it showed not only quantitative but also qualitative improvements. It was suddenly capable of:

Answering Theory of Mind questions

Solving complex coding problems (except in Power BI’s DAX, which may be the final frontier for an ASI)

Sustaining longer, more coherent conversations than GPT-3.

OpenAI, however, has kept its research private, publishing only a technical report.

Another turning point

January 20, 2025 could be at least as significant as the release of GPT-4.

Enter DeepSeek, a Chinese company known in the AI scene for its “V” models. On this date, they:

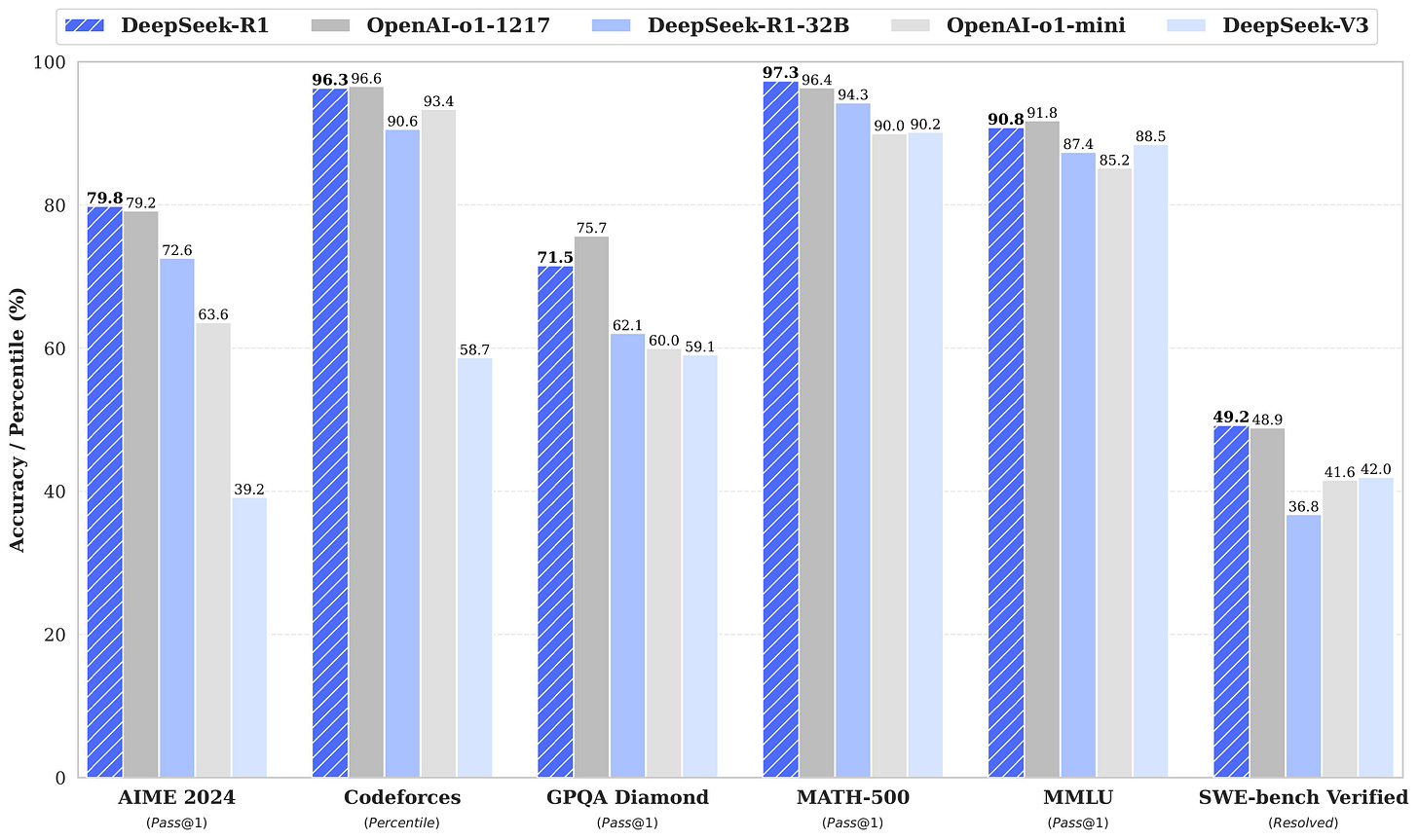

Released a SOTA-level reasoning model called “DeepSeek-R1”, comparable to OpenAI’s o1 and Google’s Gemini Experimental 1206.

Trained and ran it on a fraction of the budget of comparable models.

Published their research paper along with several smaller, distilled models, showing that their method works.

This is an impressive demonstration of the saying: necessity is the mother of invention.

How Did DeepSeek Do It?

The base “DeepSeek-V3” model, with its optimized inter-GPU communication and Mixture of Experts (MoE) architecture, served as the foundation for training DeepSeek-R1-Zero via pure Reinforcement Learning (RL).

Using Group Relative Policy Optimization (GRPO), a method DeepSeek had introduced in its earlier DeepSeekMath work, DeepSeek-R1-Zero improved its reasoning capabilities without any supervised fine-tuning (SFT).

The genius of GRPO lies in relative evaluation: for each prompt, the policy network samples a group of outputs, and each output is scored relative to the others in its group rather than by a separate critic (value) model, which saves the memory and compute a critic would require.
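To make "relative evaluation" concrete, here is a minimal sketch of the group-relative advantage, assuming the formulation from the DeepSeekMath paper: each output's advantage is its reward minus the group mean, divided by the group standard deviation. The function names, the use of population standard deviation, and the toy rewards are illustrative assumptions; the full GRPO objective also averages over tokens and adds a KL penalty toward a reference model, both omitted here.

```python
import math
import statistics

def group_relative_advantages(rewards, eps=1e-8):
    """Score each sampled output relative to the others in its group.

    A_i = (r_i - mean(r)) / std(r). eps guards against a group in which every
    output got the same reward (std = 0), which yields all-zero advantages.
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std: an assumption
    return [(r - mean) / (std + eps) for r in rewards]

def clipped_surrogate(logp_new, logp_old, advantage, clip_eps=0.2):
    """PPO-style clipped objective term for one output (to be maximized)."""
    ratio = math.exp(logp_new - logp_old)
    clipped_ratio = min(max(ratio, 1 - clip_eps), 1 + clip_eps)
    return min(ratio * advantage, clipped_ratio * advantage)

# One prompt, a group of four sampled answers, scored 1 (correct) or 0 (wrong).
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)
print([round(a, 3) for a in advantages])  # → [1.0, -1.0, -1.0, 1.0]
```

Correct answers are pushed up and wrong ones pushed down purely by comparison within the group, so no learned value estimate is needed. If every answer in a group is equally good or bad, the advantages are all zero and that group contributes no learning signal.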

This purely RL-driven approach allowed the model to autonomously develop sophisticated behaviors in its reasoning, such as:

Self-Verification: Checking its own reasoning steps for errors.

Reflection: Revisiting and refining its thought process.

Aha-moment pivoting: Recognizing and correcting mistakes mid-reasoning.

The full training pipeline that led to the final DeepSeek-R1 also involved:

Cold-Start Fine-Tuning: a small, high-quality dataset to help achieve early training stability.

Further RL training to improve the model's reasoning in reasoning-intensive tasks such as coding, mathematics, science, and logic, with an added reward for language consistency. In doing so, they addressed a problem seen in Qwen's QwQ reasoning model, which often mixes Chinese and English in its inner monologue.

Refining outputs via rejection sampling (keeping only correct, readable samples) and Supervised Fine-Tuning on the result.
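The last two steps can be sketched together. As we read the R1 paper, the language-consistency reward is roughly the proportion of target-language words in the chain of thought, and mixed-language chains of thought were filtered out when rejection-sampling data for SFT. The sketch below assumes English as the target language and uses a hypothetical tokenizer (Latin words, plus each CJK ideograph as one token, since Chinese has no spaces); the threshold, the toy generator, and all function names are illustrative, not DeepSeek's code.

```python
import random
import re

# Hypothetical tokenizer: Latin-alphabet words, or single CJK ideographs.
TOKEN = re.compile(r"[A-Za-z]+|[\u4e00-\u9fff]")

def language_consistency(cot: str) -> float:
    """Fraction of tokens in the target language (assumed: English)."""
    tokens = TOKEN.findall(cot)
    if not tokens:
        return 0.0
    latin = sum(1 for t in tokens if t.isascii())
    return latin / len(tokens)

def rejection_sample(prompt, generate, accept, k=8):
    """Draw k candidates from the model and keep only the accepted ones."""
    candidates = [generate(prompt) for _ in range(k)]
    return [c for c in candidates if accept(c)]

# Toy stand-ins for the model's (chain of thought, answer) outputs.
rng = random.Random(0)
CANDIDATES = [
    ("Two plus three is five.", "5"),
    ("Two plus three 等于 five.", "5"),  # correct, but mixes languages
    ("Two plus three is six.", "6"),    # fluent, but wrong
]

def toy_generate(prompt):
    return rng.choice(CANDIDATES)

def accept(sample, reference="5", min_consistency=0.95):
    """Keep a sample only if it is correct and (almost) monolingual."""
    cot, answer = sample
    return answer == reference and language_consistency(cot) >= min_consistency

kept = rejection_sample("2 + 3 = ?", toy_generate, accept, k=16)
sft_data = [("2 + 3 = ?", cot, answer) for cot, answer in kept]
```

During RL, `language_consistency` would be added to the reward; during rejection sampling, the same kind of check acts as a filter, so the SFT data only contains correct, single-language reasoning.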

The result

R1 is competitive in quality with similar models on the market and far cheaper to use.

We’ve seen this trajectory before:

October 2015: AlphaGo defeats the European Go Champion Fan Hui (5-0)

March 2016: AlphaGo beats Lee Sedol, one of the world’s top players (4-1)

December 2017: DeepMind introduces AlphaZero, mastering Go, Chess, and Shogi through self-play without human data.

AlphaZero is now superhuman in multiple narrow domains, such as:

Estimated Elo in Chess: ~3,430 (Magnus Carlsen: ~2,850).

Estimated Elo in Go: > 5,500 (Ke Jie: ~3,700).
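To get a feel for what these gaps mean, the standard Elo model converts a rating difference into an expected score (wins count 1, draws 0.5). A minimal sketch, applied only to the chess estimates above, since Go rating lists use their own scales:

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score of player A against player B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))

# A ~580-point gap: AlphaZero (~3,430) vs. Magnus Carlsen (~2,850).
print(round(expected_score(3430, 2850), 3))  # → 0.966
```

In other words, at those estimated ratings AlphaZero would be expected to collect roughly 96.6% of the available points against the world's best human player.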

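Similarly, DeepSeek-R1-Zero demonstrates that purely RL-driven training can lead to significant improvements in reasoning capabilities. While AlphaZero used Monte Carlo Tree Search (MCTS) to guide its decisions, DeepSeek-R1-Zero uses no tree search and is optimized with GRPO instead. The DeepSeek team reports that their own attempts at MCTS for LLM reasoning did not scale well, because the space of possible token sequences is vastly larger than that of a board game.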

The significance

Power of RL

DeepSeek-R1-Zero’s purely RL-based training is a breakthrough, showing that LLMs can autonomously develop advanced reasoning strategies without supervised fine-tuning.

This approach is cost-effective and scalable, reducing reliance on massive human-labeled datasets.
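Part of what keeps this cheap is the reward itself. The R1 paper describes rule-based rewards for R1-Zero rather than a learned neural reward model: an accuracy reward (is the final answer correct?) and a format reward (is the reasoning wrapped in `<think>` tags, followed by the answer?). A minimal sketch, assuming exact string matching for accuracy and equal weighting of the two rewards (both assumptions for illustration):

```python
import re

# Expected layout: <think>reasoning</think> <answer>final answer</answer>
PATTERN = re.compile(r"^<think>(.+?)</think>\s*<answer>(.+?)</answer>$", re.DOTALL)

def rule_based_reward(completion: str, reference: str) -> float:
    """Format reward (1.0 for the right tags) plus accuracy reward (1.0 if correct)."""
    match = PATTERN.match(completion.strip())
    if match is None:
        return 0.0  # wrong format: no reward, and the answer is not checked
    format_reward = 1.0
    answer = match.group(2).strip()
    accuracy_reward = 1.0 if answer == reference.strip() else 0.0
    return format_reward + accuracy_reward

print(rule_based_reward("<think>2 + 2 = 4</think> <answer>4</answer>", "4"))  # → 2.0
```

Because the rewards are checked by rules, math and coding problems with verifiable answers can supply the training signal without human-labeled reasoning traces.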

Autonomous Learning

Like AlphaZero, DeepSeek-R1-Zero demonstrates that self-improvement through RL is possible, even in complex domains like reasoning.

The emergence of behaviors like self-verification and reflection suggests that complex cognitive strategies can arise from simple, reward-driven processes.

Open-Source Contribution

Publishing the paper and model weights allows other researchers, and projects like Hugging Face's open-r1, to replicate and build on DeepSeek’s results.

Distillation Enables Effective Knowledge Transfer

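Smaller models can achieve competitive reasoning by distilling patterns from larger RL-trained models: DeepSeek fine-tuned smaller Qwen- and Llama-based models on reasoning samples generated by DeepSeek-R1.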

A Stepping Stone Towards AGI

While still far from achieving Artificial General Intelligence, DeepSeek's work points the way for future research toward it. Their method is:

Potentially prone to reward hacking

Budget-friendly

Full of massive potential

Looking Ahead

2025 has just begun, and 0120 will likely be as pivotal in the journey toward AGI as 0314 was.

No diminishing returns are in sight.