I have previously written an analysis of the significance of DeepSeek R1’s release. Since then, everyone and their mother has become aware that the small Chinese company has achieved a similar result to the Magnificent Seven on a fraction of the budget.

Stock markets are panicking and people are seeing bubbles bursting.

But 99.99% miss the bigger picture.

DeepSeek's R1 is significant on multiple levels, and the biggest is not its budget.

The biggest is that what Ilya Sutskever, then chief scientist at OpenAI, saw in 2023 now lies in plain sight for anyone:

LLM capabilities in narrow domains can be automatically improved through reinforcement learning.

In one word: ACCELERATION.

AlphaZero, as discussed earlier here, has reached superhuman capabilities in narrow domains (Chess, Go). What DeepSeek has demonstrated with their R1 model is that a similar, but not the same, approach is viable for LLMs.

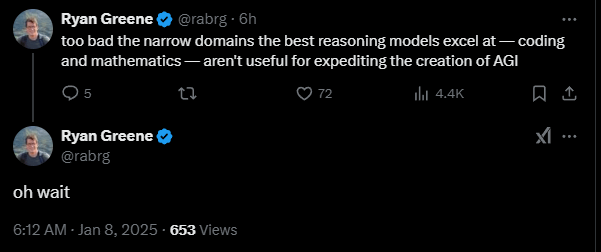

Mathematics, coding and reasoning are the key areas where this method will produce rapid capability gains in the short term. The better the base model, the greater the gains.
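Why these domains? A big part of it is that answers there can be checked by a program, so the reward signal for reinforcement learning comes for free, with no human in the loop. The R1 paper describes simple rule-based rewards for accuracy and for output format. Here is a minimal sketch of that idea. It is not DeepSeek's code: the function names, the exact tag layout and the equal weighting of the two rewards are my own assumptions for illustration.

```python
import re
from typing import Optional


def extract_answer(completion: str) -> Optional[str]:
    """Return the text inside the last <answer>...</answer> pair, if any."""
    matches = re.findall(r"<answer>(.*?)</answer>", completion, flags=re.DOTALL)
    return matches[-1].strip() if matches else None


def format_reward(completion: str) -> float:
    """1.0 if the output is exactly <think>...</think><answer>...</answer>."""
    pattern = r"\s*<think>.*?</think>\s*<answer>.*?</answer>\s*"
    return 1.0 if re.fullmatch(pattern, completion, flags=re.DOTALL) else 0.0


def accuracy_reward(completion: str, expected: str) -> float:
    """1.0 if the extracted answer matches the known solution exactly."""
    answer = extract_answer(completion)
    return 1.0 if answer is not None and answer == expected.strip() else 0.0


def total_reward(completion: str, expected: str) -> float:
    """Sum of both rewards (equal weighting is an assumption of this sketch)."""
    return accuracy_reward(completion, expected) + format_reward(completion)


if __name__ == "__main__":
    sample = "<think>2 + 2 equals 4</think><answer>4</answer>"
    print(total_reward(sample, "4"))
```

A training loop would sample many completions per problem, score each one with a function like `total_reward`, and nudge the model towards the higher-scoring ones. No human labels are needed, which is what makes the loop automatic.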

DeepSeek’s findings have already been reproduced by students at Berkeley on a small model called TinyZero, and Hugging Face is currently replicating them on a larger scale in their open-r1 project.

What’s next?

The implications are far-reaching and hard to predict. What is certain:

In the coming months, powerful models with advanced reasoning will flood the AI space.

Big companies are forced to release faster to keep their edge. The way they will do this is by minimizing safety testing. You'd run out of fingers (and toes) counting how many safety researchers have left OpenAI already.

Researchers, with the help of the most powerful models such as o3, will race to crack other domains and plug them into the acceleration machine.